Black Box AI Explained: How It Works and Why It Matters

Bambam Kumar Yadav / 4 weeks - 0

What is Black Box AI?

Black box artificial intelligence (AI) refers to AI systems whose inner workings are opaque and hard for humans to understand. In contrast to traditional programming, where programmers give explicit instructions, these AI models function in ways that even their developers might not completely understand. This opacity significantly affects how we create, deploy, and oversee AI technology. When we discuss AI, we frequently mean systems that learn from massive volumes of data instead of following explicitly programmed rules. This data-driven approach has transformed what AI can do, but it has also raised issues of trust, knowledge representation, and explainability.

How Black Box AI Models Work

Fundamentally, black box AI systems work by analysing data patterns using complex mathematical procedures. This is a condensed description of how various AI models work:

- Data Collection: The AI system receives large quantities of data related to the problem it's designed to solve.

- Training: The system analyzes this data to identify patterns and relationships, adjusting its internal parameters to improve performance on specific tasks.

- Prediction or Decision-Making: Once trained, the AI applies what it has learned to make predictions or decisions when presented with new data.
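The three steps above can be sketched in a few lines of Python. This is only an illustrative toy, not how any production system is built: the data is synthetic, the model is a tiny logistic regression with three parameters, and the function names (`collect_data`, `train`, `predict`) are made up for this example.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def collect_data(n=200, seed=0):
    """Step 1, data collection: synthetic points labelled 1 when x1 + x2 > 0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x1, x2), 1 if x1 + x2 > 0 else 0))
    return data

def train(data, epochs=100, lr=0.1):
    """Step 2, training: nudge the internal parameters to reduce prediction error."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), y in data:
            error = sigmoid(w1 * x1 + w2 * x2 + b) - y
            w1 -= lr * error * x1
            w2 -= lr * error * x2
            b -= lr * error
    return w1, w2, b

def predict(params, x):
    """Step 3, prediction: apply the learned parameters to new data."""
    w1, w2, b = params
    return 1 if sigmoid(w1 * x[0] + w2 * x[1] + b) >= 0.5 else 0

data = collect_data()
params = train(data)
accuracy = sum(predict(params, x) == y for x, y in data) / len(data)
print("learned parameters:", params)
print("training accuracy:", accuracy)
```

With only three parameters, this model is still easy to read. The black box problem appears when the same loop is applied to models with vastly more parameters.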

Modern AI systems, especially deep learning networks, contain millions or even billions of parameters that interact in complicated ways. Because of this complexity, it is difficult to explain an AI system's conclusions in terms that humans can understand.
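To get a feel for how quickly parameter counts grow, here is a small sketch that counts the weights and biases in a fully connected network. The layer sizes are hypothetical and chosen only for illustration; real architectures vary widely.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected (dense) network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # One weight per input-output pair, plus one bias per output unit.
        total += n_in * n_out + n_out
    return total

# Hypothetical small image classifier: 784 inputs, two hidden layers, 10 outputs.
small_net = [784, 512, 512, 10]
print(count_parameters(small_net))  # 669706
```

Even this small network has well over half a million parameters, and no single one of them corresponds to a rule a person could read.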

Before delving into the technical facets of AI creation, students enrolled in AI courses in Noida or elsewhere must grasp these foundational ideas.

Why Black Box AI Matters

The significance of Black Box AI extends beyond technical curiosity. Here's why this concept matters in our increasingly AI-driven world:

- Trust and Adoption: People may be reluctant to trust or use an AI system if they are unsure of how it makes judgments. This is especially true in high-stakes fields where understanding the logic underlying AI suggestions is essential, such as healthcare, finance, or autonomous driving.

- Ethical Implications: When AI systems make decisions that have an impact on people's lives, the ethical implications of artificial intelligence become a critical concern. It is difficult to spot and resolve any potential biases or unfair outcomes ingrained in these systems in the absence of transparency.

- Regulatory Compliance: Explainability is increasingly becoming a legal requirement in many jurisdictions as governments around the world create regulations for AI. If businesses using black box AI are unable to sufficiently explain AI decisions, they may soon encounter compliance issues.

- Debugging and Improvement: Black box AI's opacity makes it challenging for developers to find and address the root causes of unexpected or undesirable outcomes.

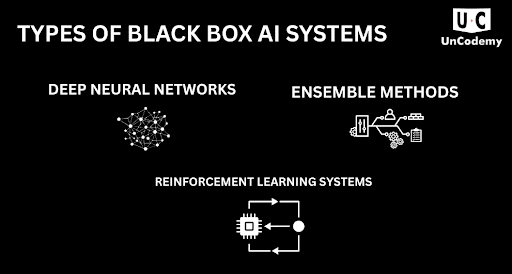

Types of Black Box AI Systems

Several types of AI systems are typically considered "black boxes" due to their complexity and lack of interpretability:

- Deep Neural Networks: These complex AI models have many layers of interconnected nodes, a structure loosely inspired by the human brain. Neural networks are extremely powerful, but they are notoriously hard to understand.

- Ensemble Methods: These methods enhance overall performance by combining several AI models. The decision-making process is opaque due to the intricacy of the interactions between models.

- Reinforcement Learning Systems: Through trial and error, these AI systems develop strategies that may be very successful but challenging for humans to comprehend or anticipate.
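As a rough illustration of the ensemble idea, the sketch below combines three simple rules by majority vote. The rules, thresholds, and field names are invented for this example and do not reflect any real lending system.

```python
from collections import Counter

# Three hypothetical, individually readable rules for a loan decision.
def rule_income(applicant):
    return "approve" if applicant["income"] >= 40000 else "reject"

def rule_debt(applicant):
    return "approve" if applicant["debt_ratio"] <= 0.4 else "reject"

def rule_history(applicant):
    return "approve" if applicant["years_of_history"] >= 3 else "reject"

MODELS = [rule_income, rule_debt, rule_history]

def ensemble_predict(applicant):
    """Return the majority decision along with each model's individual vote."""
    votes = [model(applicant) for model in MODELS]
    decision = Counter(votes).most_common(1)[0][0]
    return decision, votes

applicant = {"income": 45000, "debt_ratio": 0.55, "years_of_history": 5}
decision, votes = ensemble_predict(applicant)
print(decision, votes)  # approve ['approve', 'reject', 'approve']
```

With three rules, the vote is still easy to follow. Practical ensembles may combine hundreds of learned models, at which point no single member explains the final decision.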

Students in a Noida AI course are likely to encounter these models as they move from fundamental to more complex AI concepts.

Efforts to Make Black Box AI More Transparent

Recognizing the challenges posed by the opacity of how artificial intelligence works, researchers and practitioners are developing methods to make Black Box AI more interpretable:

- Explainable AI (XAI): The goal of this new field is to develop AI systems that can explain their choices in a way that is understandable to humans. XAI methods seek to strike a balance between transparency and performance.

- Model-Agnostic Explanation Methods: These methods treat the AI system as a true black box, examining only its inputs and outputs to produce explanations. This group includes methods such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations).

- Inherently Interpretable Models: Even though they might perform marginally worse than intricate Black Box systems, some researchers support creating simpler, easier-to-understand AI models from the ground up.
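The model-agnostic idea can be illustrated with a much simpler technique than SHAP or LIME: replace one input feature at a time with a baseline value and watch how the output changes. The sketch below is that simple perturbation check, not an implementation of either library. The `black_box` function, its coefficients, and the feature names are all invented stand-ins for a model we can call but not inspect.

```python
import math

FEATURES = ["income", "debt_ratio", "age"]

def black_box(x):
    """Stand-in for an opaque model: the explainer only calls it, never reads it."""
    z = 0.00008 * x["income"] - 6.0 * x["debt_ratio"] + 0.0 * x["age"] - 1.0
    return 1.0 / (1.0 + math.exp(-z))

def local_sensitivity(model, x, baseline):
    """Swap each feature for its baseline value and record the drop in output."""
    original = model(x)
    effects = {}
    for name in FEATURES:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        effects[name] = original - model(perturbed)
    return effects

applicant = {"income": 60000, "debt_ratio": 0.2, "age": 35}
baseline = {"income": 40000, "debt_ratio": 0.4, "age": 40}  # hypothetical "typical" applicant

effects = local_sensitivity(black_box, applicant, baseline)
for name, effect in effects.items():
    print(f"{name}: {effect:+.3f}")
```

A positive value means the applicant's actual feature value pushed the score above what the baseline value would have given. Here `age` has no effect at all, which the check reveals without ever looking inside the model.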

Explainable AI modules are now a common feature of comprehensive AI courses in Noida, demonstrating the field's increasing significance.

Real-World Applications of Black Box AI

Despite the challenges, Black Box AI is powering innovations across numerous industries:

- Healthcare: In the analysis of medical images to identify diseases, AI systems can occasionally outperform human experts. Naturally, though, medical professionals and patients want to know how the AI arrived at its diagnosis.

- Finance: Black box AI is used by banks and other financial organisations for algorithmic trading, fraud detection, and credit scoring. These systems' opacity calls into question accountability and fairness.

- Autonomous Vehicles: Complex AI systems are necessary for self-driving cars to navigate and make snap decisions. It is essential to comprehend how these systems operate to maintain safety and foster public confidence.

- Content Recommendation: Advanced AI is used by social media websites and streaming platforms to suggest content. There have been worries about "filter bubbles" and the reinforcement of preexisting biases due to the opaqueness of the process used to create these recommendations.

An AI course in Noida can give professionals who want to learn more about these applications practical experience with real-world AI systems.

Future of Black Box AI

As artificial intelligence becomes more complex, the future of black box AI will likely involve finding a balance between explainability and performance. The following are some emerging trends:

- Regulatory Frameworks: Expect more thorough regulations that require AI systems to offer some degree of explainability, particularly in high-risk applications.

- Hybrid Approaches: Hybrid systems that combine the strength of intricate black box models with easier-to-understand components are likely to emerge.

- AI Literacy: General AI literacy will increase as AI becomes more widely used, enabling more people to understand the fundamentals of how these systems operate, even though the technical specifics will remain intricate.

After taking an AI course in Noida, students will be prepared to handle this changing environment and support ethical AI advancement.

Conclusion: Illuminating the Black Box

The black box world of AI represents both the huge potential and important challenges of contemporary artificial intelligence. Even though these systems are capable of producing amazing outcomes, their opacity poses significant ethical, trust, and accountability issues.

Finding explanations for AI decisions is becoming more and more crucial as we continue to incorporate AI into important facets of our lives and society. The future of artificial intelligence will be shaped by initiatives to shed light on the "black box," whether through enhanced regulatory frameworks or technical advancements in explainable AI.

For people who are captivated by these advancements and interested in joining this fascinating field, pursuing an AI course in Noida or looking into online learning resources can be a great place to start. Understanding how AI operates is no longer just for experts; anyone navigating our increasingly AI-driven world needs it.

Frequently Asked Questions (FAQs)

Q: What exactly makes an AI system a "Black Box"?

A: An AI system becomes a "Black Box" when its internal decision-making processes are too complex to be easily understood by humans.

Q: Can Black Box AI be dangerous?

A: Black Box AI isn't inherently dangerous, but its lack of transparency makes it harder to identify biases and errors in decision-making.

Q: Is all AI considered a Black Box?

A: No, simpler AI models like decision trees are quite interpretable; the black box challenge mainly affects advanced techniques like deep learning.

Q: How can I learn more about Black Box AI?

A: Taking an AI course in Noida or working through online learning resources is a good way to gain hands-on experience with these sophisticated AI systems.

Q: Will Black Box AI ever become fully transparent?

A: Complete transparency for complex AI systems is challenging, but significant progress is being made in developing explainable AI techniques.