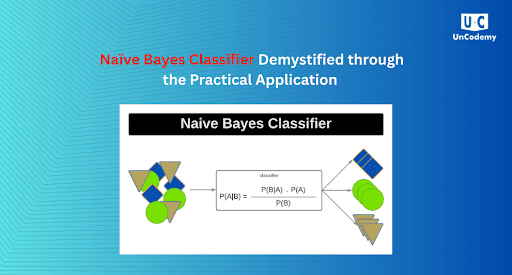

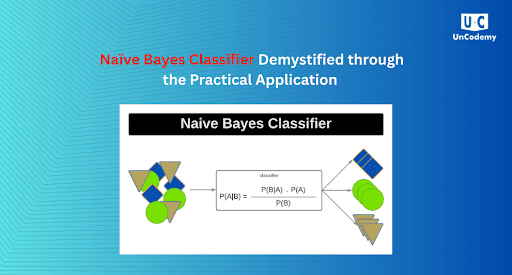

Naïve Bayes Classifier Demystified Through Practical Application

Bambam Kumar Yadav

This article aims to demystify the Naïve Bayes classifier by explaining its theoretical foundations and illustrating its effectiveness through practical application.

Understanding the Theoretical Foundation

At the core of the Naïve Bayes classifier lies Bayes’ Theorem, a fundamental rule in probability theory that describes how to update the probability of a hypothesis as more evidence or information becomes available:

P(H|E) = \frac{P(E|H) \cdot P(H)}{P(E)}

Where:

- P(H|E) is the posterior probability: the probability of hypothesis H given the evidence E,

- P(E|H) is the likelihood: the probability of observing the evidence E assuming the hypothesis H is true,

- P(H) is the prior probability of the hypothesis H,

- P(E) is the probability of the evidence E.
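A quick numeric check makes the formula concrete. The sketch below is a minimal Python example with made-up numbers, which are assumptions and not measured data: 40% of emails are spam, the word “free” appears in 30% of spam emails, and it appears in 5% of ham emails. With only two hypotheses (spam or not spam), P(E) is expanded as P(E|H)P(H) + P(E|not H)P(not H).

```python
def posterior(prior_h: float, likelihood_h: float, likelihood_not_h: float) -> float:
    """Return P(H|E) for a binary hypothesis (H vs. not H) using Bayes' Theorem."""
    # P(E) by the law of total probability: P(E|H)P(H) + P(E|not H)P(not H)
    evidence = likelihood_h * prior_h + likelihood_not_h * (1.0 - prior_h)
    return likelihood_h * prior_h / evidence

# Hypothetical numbers: P(Spam) = 0.4, P("free"|Spam) = 0.3, P("free"|Ham) = 0.05
p_spam_given_free = posterior(prior_h=0.4, likelihood_h=0.3, likelihood_not_h=0.05)
print(round(p_spam_given_free, 4))  # → 0.8
```

Under these assumed numbers, seeing “free” raises the probability of spam from the prior of 0.4 to a posterior of 0.12 / 0.15 = 0.8.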


Why Naïve Bayes? Key Advantages

- Simplicity: It’s easy to implement and understand, making it suitable for quick prototyping.

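- Speed: Training amounts to counting feature frequencies per class in a single pass over the data. The method is therefore computationally efficient and scales well to large datasets.

- Robustness to Irrelevant Features: Each feature contributes its own independent factor, which becomes an additive term in log space. An irrelevant attribute has roughly the same probability in every class, so it shifts all class scores by about the same amount and has minimal impact on the decision.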

- Effective with Small Datasets: Performs well even with limited training data.

Practical Application: Email Spam Filtering

A quintessential example of Naïve Bayes in action is email spam detection.

Step 1: Training the Classifier

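Given a labeled dataset of emails categorized as “spam” or “ham” (non-spam), the classifier learns:

- The frequency of each word in spam vs. ham emails, which gives the likelihoods P(w_i | \text{Spam}) and P(w_i | \text{Ham}),

- The overall probability of a message being spam or ham, which gives the priors P(\text{Spam}) and P(\text{Ham}).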
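The training step can be sketched in a few lines of Python. This is a minimal illustration and not a production filter. The four training emails are hypothetical, and words are split on whitespace. The sketch also uses add-one (Laplace) smoothing, which is an assumption not mentioned in the text above. It is included so that a word never seen in one class does not receive a probability of zero.

```python
from collections import Counter

def train(emails: list[tuple[str, str]], alpha: float = 1.0):
    """Estimate class priors and smoothed word likelihoods from (text, label) pairs.

    Add-alpha (Laplace) smoothing is an assumption of this sketch: it keeps
    words unseen in one class from getting probability zero.
    """
    label_counts = Counter(label for _, label in emails)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in emails:
        word_counts[label].update(text.lower().split())

    # Vocabulary = every distinct word seen in any class
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)

    # Prior P(class) = fraction of training emails with that label
    priors = {label: n / len(emails) for label, n in label_counts.items()}
    likelihoods = {}
    for label, counts in word_counts.items():
        total = sum(counts.values())
        # Smoothed P(word | class) for every word in the vocabulary
        likelihoods[label] = {
            w: (counts[w] + alpha) / (total + alpha * len(vocab)) for w in vocab
        }
    return priors, likelihoods, vocab

# Tiny hypothetical training set
emails = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
priors, likelihoods, vocab = train(emails)
print(priors)  # → {'spam': 0.5, 'ham': 0.5}
```

With these toy emails, P(\text{free} | \text{Spam}) works out to (2 + 1) / (8 + 12) = 0.15. The word “free” occurs twice among the 8 spam words, and the vocabulary has 12 distinct words.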

Step 2: Computing Probabilities

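When a new email arrives, the algorithm calculates:

P(\text{Spam} | \text{Words}) \propto P(\text{Spam}) \cdot \prod_{i=1}^{n} P(w_i | \text{Spam})

where w_1, \dots, w_n are the words in the email. The product form follows from the naïve assumption that words are conditionally independent given the class. The denominator P(\text{Words}) from Bayes’ Theorem is dropped (hence \propto) because it is the same for every class. The algorithm does the same for the ham class and chooses the class with the higher probability.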
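In practice, a product of many small probabilities can underflow to zero in floating point. Implementations therefore commonly add logarithms instead of multiplying probabilities. Because the logarithm is monotonic, this does not change which class scores highest. The sketch below assumes hypothetical, already-smoothed parameters, and it skips any word that has no estimated probability (also an assumption).

```python
import math

def log_score(words, prior, likelihoods):
    """Return log P(class) + sum of log P(w_i | class), the log of the unnormalized posterior."""
    score = math.log(prior)
    for w in words:
        if w in likelihoods:  # assumption: words with no estimate are ignored
            score += math.log(likelihoods[w])
    return score

# Hypothetical, already-smoothed parameters for a two-word vocabulary
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"free": 0.30, "meeting": 0.02},
    "ham": {"free": 0.05, "meeting": 0.10},
}

email = ["free", "free", "meeting"]
scores = {c: log_score(email, priors[c], likelihoods[c]) for c in priors}
print(max(scores, key=scores.get))  # → spam
```

Here the spam score is log(0.4 × 0.3 × 0.3 × 0.02) = log(0.00072), and the ham score is log(0.6 × 0.05 × 0.05 × 0.1) = log(0.00015), so spam wins.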

Step 3: Classifying the Email

The email is classified as spam if:

P(\text{Spam} | \text{Words}) > P(\text{Ham} | \text{Words})

This simple yet powerful process allows email service providers to filter out unwanted messages automatically and efficiently.
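The spam-vs-ham comparison only needs the unnormalized scores, because P(\text{Words}) is the same for both classes. If an actual probability is wanted, the scores can be normalized. The sketch below takes hypothetical log scores as input. It normalizes them with the log-sum-exp trick, an implementation choice assumed here to avoid underflow.

```python
import math

def classify(log_scores: dict[str, float]) -> tuple[str, dict[str, float]]:
    """Pick the class with the highest score and normalize scores into posteriors.

    Normalizing is optional for the decision itself: P(Words) is shared by all
    classes, so the ranking of the unnormalized scores is already correct.
    """
    best = max(log_scores, key=log_scores.get)
    # Log-sum-exp trick: subtract the max before exponentiating to avoid underflow
    m = log_scores[best]
    total = sum(math.exp(s - m) for s in log_scores.values())
    posteriors = {c: math.exp(s - m) / total for c, s in log_scores.items()}
    return best, posteriors

# Hypothetical log scores: log(0.00072) for spam, log(0.00015) for ham
label, post = classify({"spam": math.log(0.00072), "ham": math.log(0.00015)})
print(label, round(post["spam"], 3))  # → spam 0.828
```

With these inputs, the email is labeled spam with a normalized posterior of about 0.828 (0.00072 / 0.00087).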

The utility of Naïve Bayes extends far beyond spam detection:

- Sentiment Analysis: Classifying text (e.g., reviews or tweets) as positive, negative, or neutral.

- Document Categorization: Automatically tagging news articles into topics such as politics, sports, or technology.

- Medical Diagnosis: Predicting diseases based on reported symptoms.

- Fraud Detection: Identifying anomalous transactions in banking.

Limitations and Considerations

While Naïve Bayes is a valuable tool, it is important to recognize its limitations:

- The independence assumption is rarely true in real-world data.

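- Probability estimates can be unreliable when features are correlated. The model counts correlated evidence multiple times, so its probabilities tend to be pushed toward 0 or 1, even when the predicted class is still correct.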

- It may underperform when the data contains complex patterns better captured by more advanced models (e.g., decision trees or neural networks).

Despite these limitations, Naïve Bayes is often used as a strong baseline model in many machine learning pipelines.
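An extreme case shows the effect of correlated features: the same piece of evidence is counted twice, as if it were two independent features. The probabilities below are hypothetical. Under the naive assumption, the duplicate pushes the posterior further toward certainty, even though no new information was added.

```python
def naive_posterior(prior_a, feature_likelihoods):
    """P(A | features) for two classes A and B under the naive independence assumption.

    feature_likelihoods is a list of (P(x|A), P(x|B)) pairs, one per observed feature.
    """
    score_a, score_b = prior_a, 1.0 - prior_a
    for p_a, p_b in feature_likelihoods:
        score_a *= p_a  # independence: multiply each feature's likelihood in
        score_b *= p_b
    return score_a / (score_a + score_b)

# Hypothetical feature with P(x|A) = 0.8 and P(x|B) = 0.2, equal priors
one_copy = naive_posterior(0.5, [(0.8, 0.2)])
two_copies = naive_posterior(0.5, [(0.8, 0.2), (0.8, 0.2)])  # same evidence duplicated
print(round(one_copy, 3), round(two_copies, 3))  # → 0.8 0.941
```

The second feature is a perfect copy of the first, yet it raises the posterior from 0.8 to about 0.941. This is the overconfidence described in the list of limitations.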

Naïve Bayes Algorithm

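Naïve Bayes is a supervised machine learning algorithm used for classification. It’s based on Bayes’ Theorem, which combines prior knowledge (how common each class is) with the evidence in the input features to calculate the probability of each class.

The term “naive” comes from its assumption that all features are conditionally independent of one another given the class. This assumption is rarely true in real life, but the classifier still works well in practice.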

How It Works:

- Learn from the training data how common each class is and how likely each feature value is within each class.

- Apply Bayes’ Theorem to compute the probability of each class given the input.

- Choose the class with the highest probability.
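The three steps can be sketched for categorical features, such as yes/no symptoms. The functions fit, predict_proba, and predict are hypothetical helpers written for this illustration and are not a specific library's API. The dataset is made up, and add-one smoothing is an assumption.

```python
from collections import Counter, defaultdict

def fit(X, y, alpha=1.0):
    """Step 1 (learn): count classes and per-feature values within each class."""
    class_counts = Counter(y)
    value_counts = defaultdict(Counter)  # (class, feature index) -> Counter of values
    feature_values = defaultdict(set)    # feature index -> set of values seen
    for row, label in zip(X, y):
        for j, value in enumerate(row):
            value_counts[(label, j)][value] += 1
            feature_values[j].add(value)
    return class_counts, value_counts, feature_values, alpha

def predict_proba(model, row):
    """Step 2 (apply Bayes' Theorem): prior times the naive product, then normalize."""
    class_counts, value_counts, feature_values, alpha = model
    n = sum(class_counts.values())
    scores = {}
    for c, count_c in class_counts.items():
        score = count_c / n  # prior P(c)
        for j, value in enumerate(row):
            # Add-alpha smoothed P(x_j = value | c); smoothing is an assumption here
            num = value_counts[(c, j)][value] + alpha
            den = count_c + alpha * len(feature_values[j])
            score *= num / den
        scores[c] = score
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

def predict(model, row):
    """Step 3 (choose): return the class with the highest posterior probability."""
    proba = predict_proba(model, row)
    return max(proba, key=proba.get)

# Hypothetical data: each row is (fever, cough)
X = [("yes", "yes"), ("yes", "no"), ("no", "yes"), ("no", "no"), ("no", "yes")]
y = ["flu", "flu", "cold", "cold", "cold"]
model = fit(X, y)
print(predict(model, ("yes", "yes")))  # → flu
```

For a row with fever and cough, this sketch scores flu as 0.4 × 0.75 × 0.5 = 0.15 and cold as 0.6 × 0.2 × 0.6 = 0.072, so it predicts flu.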

Example Use Cases:

- Spam detection

- Sentiment analysis

- Document classification

Pros:

- Fast and easy to use

- Works well with text data

- Good even with small datasets

Cons:

- Assumes features are conditionally independent given the class

- Less suitable for complex patterns that depend on interactions between features

Naïve Bayes in Machine Learning

Naïve Bayes is one of the basic algorithms used in Machine Learning (ML), especially for classification tasks. In ML, we teach machines to learn from data and make predictions or decisions without being explicitly programmed.

Where It Fits in ML:

Naïve Bayes belongs to the category of supervised learning, where:

- We provide labeled data (input + correct output),

- The model learns patterns,

- Then it can predict the correct class for new, unseen data.

Example: Email Spam Filter

In ML:

- You give the algorithm examples of spam and non-spam emails,

- It learns the patterns (e.g., spam emails often contain words like “win” and “free”),

- Then it can classify future emails automatically.
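One way to see the “patterns” the model learns is to compare each word's likelihood under spam and under ham. This sketch uses a hypothetical four-email dataset and add-one smoothing, which is an assumption. The words with the largest ratio P(w | \text{Spam}) / P(w | \text{Ham}) are the strongest spam indicators.

```python
from collections import Counter

# Hypothetical labeled examples: (email text, label)
emails = [
    ("win a free prize", "spam"),
    ("free money win now", "spam"),
    ("project meeting now", "ham"),
    ("meeting notes attached", "ham"),
]

# Count word occurrences per class
counts = {"spam": Counter(), "ham": Counter()}
for text, label in emails:
    counts[label].update(text.split())

vocab = set(counts["spam"]) | set(counts["ham"])
totals = {label: sum(c.values()) for label, c in counts.items()}

def likelihood(word, label, alpha=1.0):
    """Add-alpha smoothed P(word | label); smoothing is an assumption of this sketch."""
    return (counts[label][word] + alpha) / (totals[label] + alpha * len(vocab))

# Words much more likely under spam than under ham are the learned "spam patterns"
ratios = {w: likelihood(w, "spam") / likelihood(w, "ham") for w in vocab}
top = sorted(ratios, key=ratios.get, reverse=True)[:2]
print(sorted(top))  # → ['free', 'win']
```

In this toy data, “free” and “win” each appear twice in spam and never in ham, so they rank highest. A word like “meeting” gets a ratio below 1 and points toward ham.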

Why Use Naïve Bayes in ML?

- Easy to train and understand

- Performs well on text data

- Great for quick, baseline models

Common ML Applications with Naïve Bayes:

- Email spam detection

- Sentiment analysis of reviews

- News categorization

- Language detection

Naive Bayes to Data Science Success – Start Your Journey in Noida

Understanding algorithms like Naive Bayes is just the beginning of a rewarding career in Data Science. As one of the foundational tools in machine learning, Naive Bayes introduces you to how machines can learn from data, classify information, and make accurate predictions.

At our Data Science Training Course in Noida, you’ll go far beyond the basics:

- Learn not just Naive Bayes, but also advanced algorithms like Decision Trees, Random Forests, SVM, and Neural Networks.

- Master data cleaning, exploratory data analysis, and feature engineering, which are critical steps in real-world projects.

- Work on live case studies across industries like healthcare, finance, and e-commerce.

- Understand how machine learning fits into the larger Data Science pipeline, from data collection to model deployment.

Why This Course Stands Out:

- Practical training with real datasets

- Step-by-step algorithm breakdowns

- Tools covered: Python, Pandas, Scikit-learn, Power BI, Tableau

- Build your own ML models from scratch

- Industry-recognized certification

Whether you're a student, a working professional, or someone ready for a career shift, this course in Noida is your gateway into the booming world of AI and Data Science.

Learn. Apply. Grow.

Start with Naive Bayes—graduate with the confidence to build end-to-end data solutions.

Enroll now! Seats are filling fast.